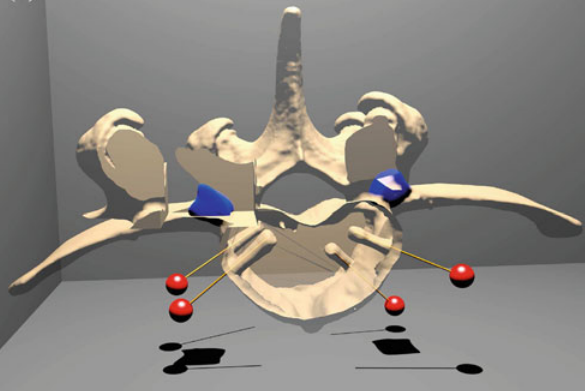

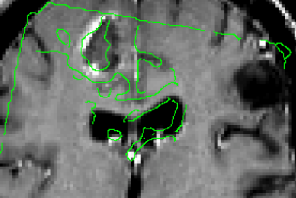

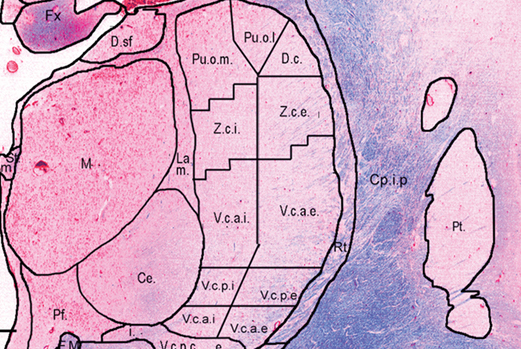

We have recently developed techniques [1] to create two 3D atlases: a lower-resolution atlas based on the Schaltenbrand and Wahren print atlas, which was integrated into a stereotactic neurosurgery planning and visualization platform (VIPER), and a higher-resolution atlas derived from a single set of manually segmented histological slices containing nuclei of the basal ganglia, thalamus, basal forebrain, and medial temporal lobe. Building on this work, we have developed, and are continuing to validate, a high-resolution computerized MRI-integrated 3D histological atlas that is useful in functional neurosurgery and in functional and anatomical studies of the human basal ganglia, thalamus, and basal forebrain.

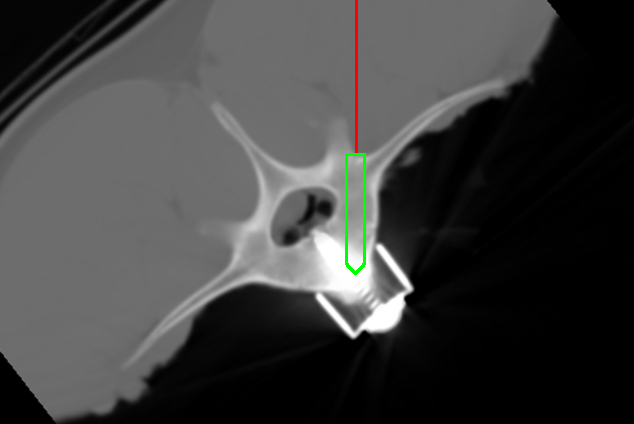

Parkinson’s disease (PD) is a neurodegenerative disorder that impairs motor function. Deep brain stimulation (DBS) is an effective therapy for drug-resistant PD. Accurate placement of the DBS electrode deep in the brain under stereotaxic conditions is key to successful surgery [2]. Accuracy depends on a number of factors, including registration error of the stereotaxic frame, geometric distortion of the MRI scans, and brain tissue shift resulting from cerebrospinal fluid (CSF) leakage, cranial pressure change, and gravity after the burr hole is opened.

By scanning through acoustic skull windows, transcranial ultrasound can provide non-invasive visualization of internal brain structures (e.g. the midbrain, blood vessels, and certain nuclei such as the substantia nigra) as well as metallic surgical instruments (e.g. the DBS electrode and cannula). We believe that such images can be used to improve stereotaxic accuracy.

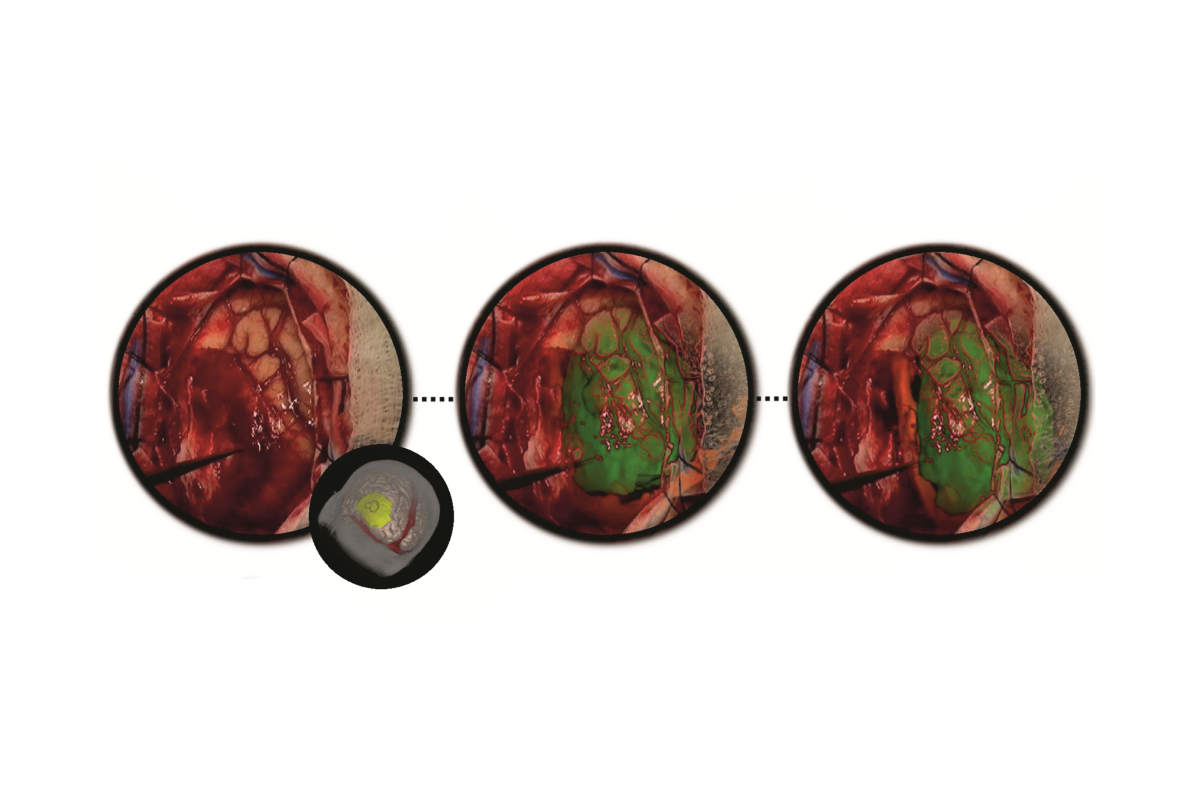

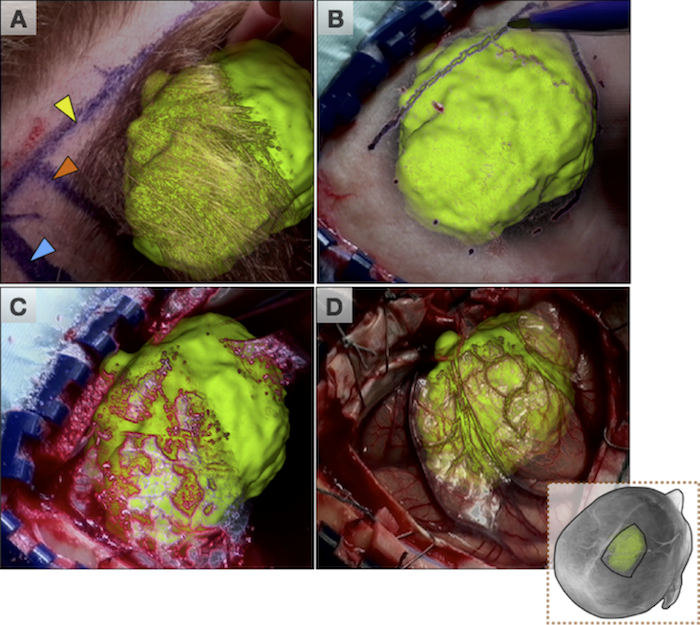

Over the past decade, we have developed a prototype image-guided neuronavigation system called IBIS (Interactive Brain Imaging System) in our research laboratory. IBIS enables the acquisition of intraoperative 2D/3D ultrasound and addresses registration errors caused by brain shift by using ultrasound data to improve the patient-to-image alignment. By linking the preoperative MRI and the corresponding surgical plan to the transcranial ultrasound with appropriate registration methods, we will enable real-time monitoring of DBS implantation and improve the safety and accuracy of the procedure. Our goal is to acquire transcranial ultrasound images and examine the performance of transcranial ultrasound as an intraoperative imaging modality.

Both the study and the surgical treatment of PD require delineating the morphology of basal ganglia nuclei. Few automatic volumetric segmentation methods have attempted to identify key brainstem substructures, including the subthalamic nucleus (STN), substantia nigra (SN), and red nucleus (RN), because of their small size and poor contrast in conventional MRI. With my Ph.D. student Yiming Xiao, I recently developed a technique [3] based on a dual-contrast patch-based label fusion method to segment the SN, STN, and RN. Using a multi-contrast multi-echo FLASH MRI sequence, our proposed method outperformed the state-of-the-art single-contrast patch-based method for segmenting brainstem nuclei. These results are encouraging for both the treatment of PD and research on the disease. This study is supported by the NSERC/CIHR Collaborative Health Research Program.
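To make the idea of patch-based label fusion with two contrasts more concrete, here is a minimal sketch in Python. It is not the implementation published in [3]; it is a generic weighted-voting scheme in which the patches from both contrasts are concatenated before comparison. The function names (`patch`, `fuse_label`) and the parameters (`r`, `search`, `h`) are hypothetical and chosen only for illustration, and the sketch assumes that all volumes are already registered to a common space.

```python
import numpy as np

def patch(img, center, r):
    """Return the flattened cubic patch of radius r around center."""
    z, y, x = center
    return img[z - r:z + r + 1, y - r:y + r + 1, x - r:x + r + 1].ravel()

def fuse_label(target, templates, center, r=1, search=1, h=1.0):
    """Estimate the label at `center` by weighted voting over template patches.

    target:    tuple of 3D arrays, one per MRI contrast (two for dual contrast)
    templates: list of (contrasts, labels) pairs; `contrasts` matches `target`
               and `labels` is an integer 3D array of manual labels
    center:    voxel index, at least r + search voxels away from every border
    h:         assumed smoothing parameter controlling how fast weights decay
    """
    # Concatenate the patches of all contrasts into one feature vector.
    t_patch = np.concatenate([patch(c, center, r) for c in target])
    z, y, x = center
    votes = {}
    for contrasts, labels in templates:
        # Compare against every template patch inside the search window.
        for dz in range(-search, search + 1):
            for dy in range(-search, search + 1):
                for dx in range(-search, search + 1):
                    q = (z + dz, y + dy, x + dx)
                    s_patch = np.concatenate([patch(c, q, r) for c in contrasts])
                    d2 = np.mean((t_patch - s_patch) ** 2)
                    w = np.exp(-d2 / h ** 2)  # similar patches vote more strongly
                    lab = int(labels[q])
                    votes[lab] = votes.get(lab, 0.0) + w
    # The fused label is the one with the largest accumulated weight.
    return max(votes, key=votes.get)

# Synthetic example: one "nucleus" occupying the right part of a small volume.
labels = np.zeros((9, 9, 9), dtype=int)
labels[:, :, 5:] = 1
contrast_a = labels.astype(float)   # structure appears bright in contrast A
contrast_b = 1.0 - contrast_a       # and dark in contrast B
library = [((contrast_a, contrast_b), labels)]
print(fuse_label((contrast_a, contrast_b), library, center=(4, 4, 6)))
```

In this sketch a single-contrast variant corresponds to passing one-element tuples, so the effect of adding a second contrast can be compared directly on the same data.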

References

[1] A.F. Sadikot, M.M. Chakravarty, G. Bertrand, V.V. Rymar, F. Al-Subaie and D.L. Collins, “Creation of computerized 3D MRI-integrated atlases of the human basal ganglia and thalamus”, Frontiers in Systems Neuroscience. 2011; 5:71.

[2] Y. Xiao, V.S. Fonov, S. Beriault, F. Al Soubaie, M.M. Chakravarty, A.F. Sadikot, G.B. Pike and D.L. Collins, “Multi-contrast unbiased MRI atlas of a Parkinson’s disease population”, Int J Comput Assist Radiol Surg. 2015 March; 10(3):329–41.

[3] Y. Xiao, V.S. Fonov, S. Beriault, I. Gerard, A.F. Sadikot, G.B. Pike and D.L. Collins, “Patch-based label fusion segmentation of brainstem structures with dual-contrast MRI for Parkinson’s disease”, Int J Comput Assist Radiol Surg. 2015 July; 10(7):1029–41.

[4] M.M. Chakravarty, G. Bertrand, C. Hodge, A.F. Sadikot and D.L. Collins, “The creation of a brain atlas for image guided neurosurgery using serial histological data”, NeuroImage. 2006; 30(2):359–76.