For intraoperative use, a neuronavigation system must relate the physical location of the patient to the preoperative models through a transformation known as the patient-to-image mapping. By tracking the patient and a set of specialized surgical tools, this mapping allows a surgeon to point to a specific location on the patient and see the corresponding anatomy in the patient-specific models. However, over the course of the intervention, hardware movement, an imperfect initial patient-to-image mapping, and movement of brain tissue invalidate this mapping. These sources of inaccuracy, collectively described as ‘brain shift’, reduce the effectiveness of using preoperative patient-specific models intraoperatively. Additionally, the surgeon is left with the cognitive burden of merging the virtual models of the patient with the visible and invisible physical anatomy.
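As a rough illustration of the patient-to-image mapping described above, the sketch below treats the mapping as a 4x4 rigid homogeneous transform and applies it to a tracked pointer tip. This is a minimal sketch under stated assumptions, not the IBIS implementation: the helper names, the rotation angle, the offsets, and the pointer coordinates are all hypothetical values chosen for illustration.

```python
import math


def make_rigid_transform(angle_deg, translation):
    """Build a 4x4 homogeneous matrix: rotation about z, then translation (mm)."""
    a = math.radians(angle_deg)
    c, s = math.cos(a), math.sin(a)
    tx, ty, tz = translation
    return [
        [c, -s, 0.0, tx],
        [s, c, 0.0, ty],
        [0.0, 0.0, 1.0, tz],
        [0.0, 0.0, 0.0, 1.0],
    ]


def apply_transform(matrix, point):
    """Map a 3D point through a 4x4 homogeneous transform."""
    x, y, z = point
    homogeneous = (x, y, z, 1.0)
    return tuple(
        sum(matrix[row][col] * homogeneous[col] for col in range(4))
        for row in range(3)
    )


# Hypothetical patient-to-image mapping (assumed values, not from a real case):
# a 90 degree rotation about z followed by an offset of (10, 0, 5) mm.
patient_to_image = make_rigid_transform(90.0, (10.0, 0.0, 5.0))

# Hypothetical pointer tip position reported by the tracker, in patient space (mm).
pointer_tip_patient = (1.0, 2.0, 3.0)

# The same location expressed in the coordinates of the preoperative images.
pointer_tip_image = apply_transform(patient_to_image, pointer_tip_patient)
print(pointer_tip_image)
```

With these assumed values the pointer tip maps to approximately (8, 1, 8) mm in image space. Any error in `patient_to_image`, or any tissue movement after it was computed, shifts this result away from the true anatomy, which is the inaccuracy the paragraph above describes.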

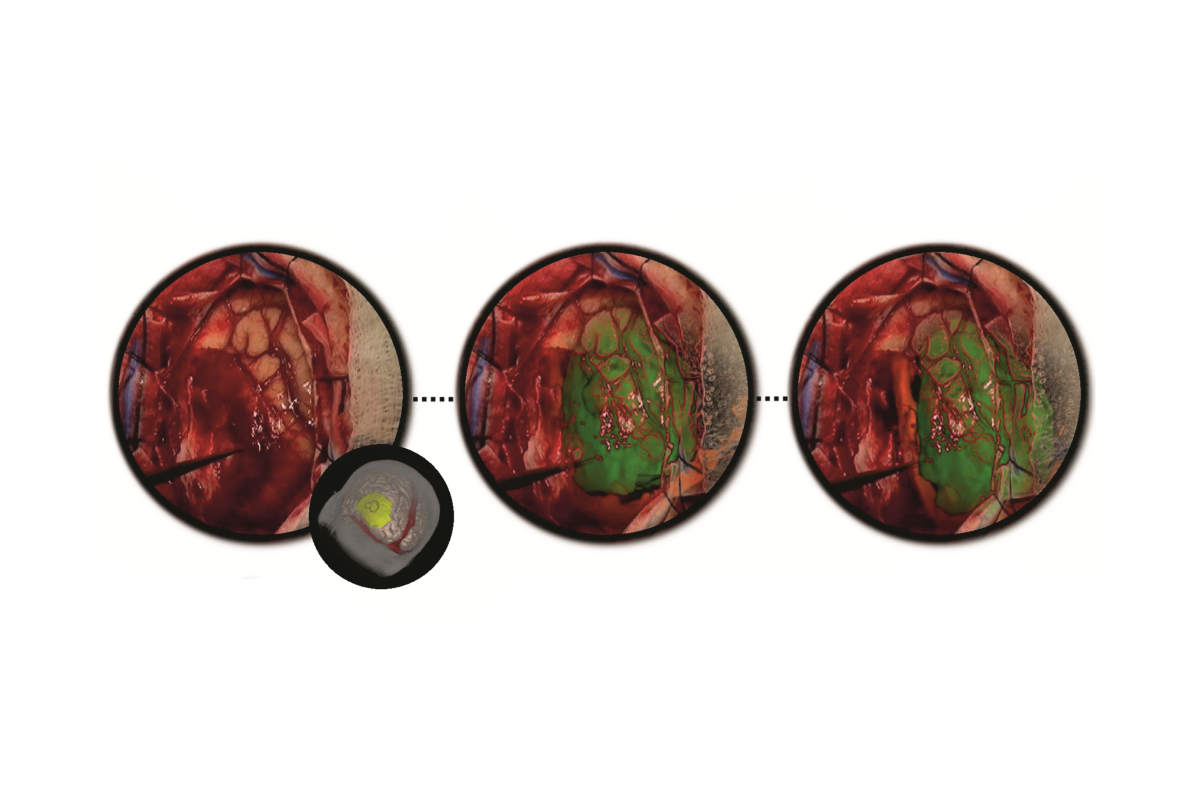

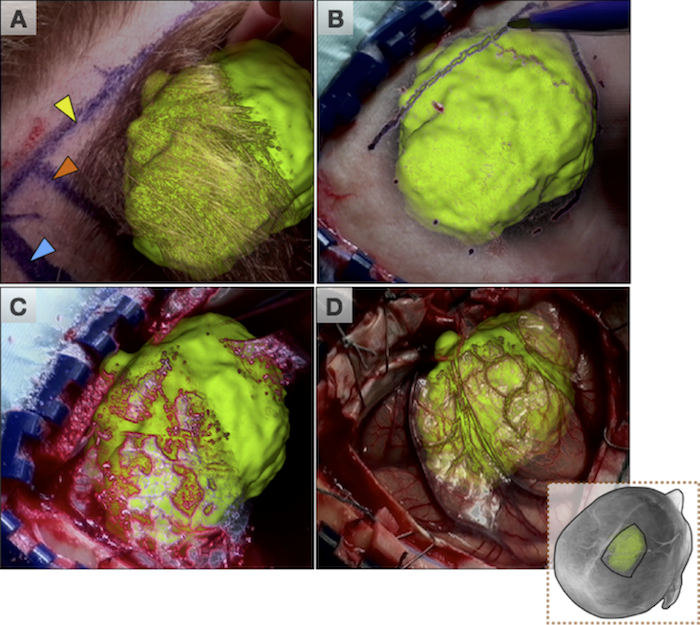

An underlying advantage of IBIS (IBIS Neuronav) is that it supports both individual streams of research and their combination, so that the limitations of one technique can be offset by another. This is demonstrated through our combination of intraoperative ultrasound (iUS) and augmented reality (AR) to improve the accuracy of AR visualizations during tumour neurosurgery. With this combination of technologies, the interpretation difficulties associated with US images are mitigated by detailed AR visualizations, and the accuracy issues associated with AR are corrected through registration of the US images. This improves patient-specific planning intraoperatively, both by prolonging the reliable use of neuronavigation and by aiding the understanding of complex three-dimensional medical imaging data, so that surgical strategies can be adapted when necessary.
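One simplified way to picture the US-based correction described above is as an extra transform, estimated by registering the US images to the preoperative images, that is composed with the original patient-to-image mapping before the AR view is rendered. The sketch below assumes a translation-only correction and hypothetical values throughout. It is not the registration method used in IBIS or in the publications listed on this page, which may well be more elaborate.

```python
def translation_matrix(tx, ty, tz):
    """4x4 homogeneous matrix for a pure translation (mm)."""
    return [
        [1.0, 0.0, 0.0, tx],
        [0.0, 1.0, 0.0, ty],
        [0.0, 0.0, 1.0, tz],
        [0.0, 0.0, 0.0, 1.0],
    ]


def compose(outer, inner):
    """Return the transform that applies `inner` first, then `outer`."""
    return [
        [sum(outer[i][k] * inner[k][j] for k in range(4)) for j in range(4)]
        for i in range(4)
    ]


def apply_transform(matrix, point):
    """Map a 3D point through a 4x4 homogeneous transform."""
    x, y, z = point
    homogeneous = (x, y, z, 1.0)
    return tuple(
        sum(matrix[row][col] * homogeneous[col] for col in range(4))
        for row in range(3)
    )


# Hypothetical initial patient-to-image mapping from the start of surgery.
patient_to_image = translation_matrix(10.0, 0.0, 5.0)

# Hypothetical correction, assumed to come from registering the US images
# to the preoperative images after brain shift has occurred.
us_correction = translation_matrix(0.0, -2.0, 1.5)

# Updated mapping: apply the original mapping, then the US-derived correction.
corrected_patient_to_image = compose(us_correction, patient_to_image)

# Hypothetical landmark on the tumour boundary, in patient space (mm).
landmark_patient = (4.0, 6.0, 2.0)
before = apply_transform(patient_to_image, landmark_patient)
after = apply_transform(corrected_patient_to_image, landmark_patient)
print(before, after)
```

Here the landmark moves from (14, 6, 7) to (14, 4, 8.5) mm in image space once the assumed correction is applied. Keeping the correction as a separate transform, rather than overwriting the original mapping, makes it straightforward to compare the AR view before and after correction.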

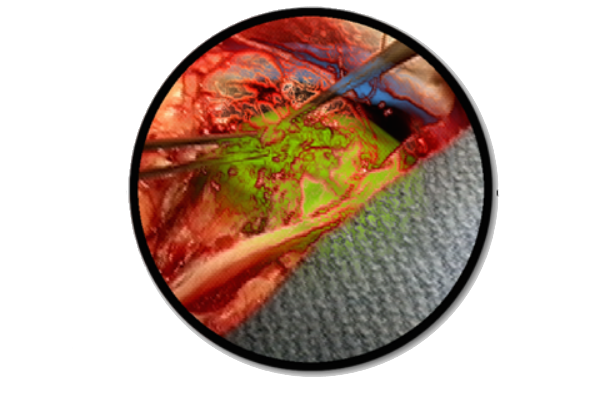

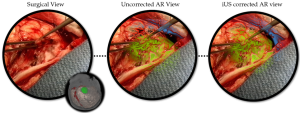

The avatar represents the orientation of the patient’s head. Shown are the surgical field of view (left), the AR view before US correction, where the tumour seems to conform unnaturally to the surrounding tissue (middle), and the brain-shift-corrected AR view, where the tumour visualization lines up naturally with the surrounding tissue and can be used for accurate intraoperative planning (right).

Publications

[1] Gerard, Ian J., Marta Kersten-Oertel, Simon Drouin, Jeffery A. Hall, Kevin Petrecca, Dante De Nigris, Tal Arbel, and D. Louis Collins. “Improving Patient Specific Neurosurgical Models with Intraoperative Ultrasound and Augmented Reality Visualizations in a Neuronavigation Environment.” In Clinical Image-Based Procedures. Translational Research in Medical Imaging, pp. 28-35. Springer International Publishing, 2015.

[2] Gerard, Ian J., Marta Kersten-Oertel, Simon Drouin, Jeffery A. Hall, Kevin Petrecca, Dante De Nigris, Tal Arbel, and D. Louis Collins. “Improving Augmented Reality Tumour Visualization with Intraoperative Ultrasound in Image Guided Neurosurgery: Case Report.” International Journal of Computer Assisted Radiology and Surgery 10(S1):1-312, 2015.